Learn to Master

Start your tech career from SCRATCH by enrolling in a data engineering course in Pune

- Live Session

- 20-Week Program

Time Span

⦿ 230+ Live Sessions

⦿ Mock after every module

⦿ 2 hrs Daily

Process

⦿ Live Session

⦿ Session recording uploaded the same day

Structure

⦿ Mock after every module

⦿ Real-time Projects after every module

⦿ Doubt Sessions & Assignments per week

Extra

⦿ Resume Building

⦿ LinkedIn Profile

⦿ Interview Prep

⦿ Naukri Profile

About the Data Engineering Course in Pune

PG Program in Data Engineering in Pune

- Duration 3-4 months

- Modules you will learn: Azure Databricks, Azure Data Factory, Synapse, Spark, PySpark, SQL, and Python for data engineering

- Data engineering job titles may include Hadoop Developer, BI Developer, Quantitative Data Engineer, Search Engineer, Technical Architect, Big Data Analyst, Solutions Architect, Data Warehouse Engineer, Data Science Software Engineer, and ETL Developer, to name a few.

- 99% Success Rate 🚀

Why Join the Data Engineering Course Online in Pune

Communication Skills

Excellent Individualistic Communication in Interviews

Analytical Skills

We suggest that you learn to understand and deeply analyze customers' requirements and needs. Analytical skills are therefore critical to successfully transforming designs into code and building a long-term career in Salesforce.

Technical Skills

Apex, Visualforce, JavaScript, CSS, HTML, and SOQL. Apart from these, you should understand web development tools like Git, Eclipse, IDEs, and Sublime.

Problem-solving Skills and Logical Skills

We all know that no CRM platform is free from errors, bugs, and problems. Good practical knowledge and problem-solving skills are therefore important for Salesforce developers. Adequate knowledge and quick problem fixing will help keep the Salesforce platform running smoothly.

Data engineering Course Online

Data Engineering Training in Pune

Prominent Academy Azure Data Engineering Training in Pune

Joining a data engineering course can be a strategic move for several reasons:

Core to Data Science: Data engineering is critical to the data science field, providing the backbone that data scientists and analysts need to do their work.

Technical Challenge: It involves complex programming and system design that can be intellectually stimulating and rewarding.

High Demand: With the exponential growth of data, skilled data engineers are in high demand across various industries.

Attractive Salaries: Data engineering is known to offer competitive salaries. In India, for example, the average salary for a data engineer is around ₹7.3 lakhs per year, with the potential to earn a great deal more with experience.

Career Growth: There's a clear path for professional development, from entry-level positions to senior roles, as you gain experience and expertise.

As for the tools, data engineers commonly use a wide range of technologies, including but not limited to:

Databases: like MySQL, PostgreSQL, and NoSQL databases such as MongoDB.

Big Data Tools: such as Apache Hadoop and Apache Spark.

Data Warehousing: solutions like Amazon Redshift and Snowflake.

ETL Tools: for data extraction, transformation, and loading, such as Apache Airflow and Talend.

Cloud Platforms: like AWS, Azure, and Google Cloud Platform for scalable data storage and processing.

These tools are essential for building and maintaining the infrastructure that enables data collection, storage, processing, and analysis. Mastery of these tools, combined with the knowledge gained from a data engineering course, can position you for a rewarding career in this high-growth industry.

This live instructor-led training program lets you learn through LIVE sessions in Pune.

Key Highlights of the Azure Data Engineering Course in Pune

In-Depth Communication

1 on 1 Coaching

Leaders in Industry

Become a Data Engineer Certified Professional using Microsoft Azure in Pune [Updated 2025]✅

Key Highlights

- Gain knowledge from experienced data experts

- 6 months work experience certificate

- Get average package up to 10+ LPA by becoming 3X cloud certified.

- Go above and beyond certification by creating a personal portfolio.

Important highlights

Data Engineering Course Syllabus in Pune

1: Introduction to Databases (what a database is, SQL, CREATE TABLE syntax, and inserting data)

2: SQL Data Types (NUMBER, CHAR, VARCHAR2, DATE, INTERVAL) and the default value concept

3: Data Integrity Constraints (NOT NULL, UNIQUE, PRIMARY KEY, CHECK, FOREIGN KEY)

4: DDL commands (CREATE, ALTER, DROP, COMMENT, TRUNCATE, RENAME), DML commands (INSERT, UPDATE, DELETE), and TCL commands (COMMIT, ROLLBACK)

5: SQL Operators (arithmetic, relational, logical, IN, BETWEEN, LIKE, ||, IS NULL, IS NOT NULL)

NVL, NVL2, and COALESCE

6 & 7: Aggregate Functions (MIN, MAX, SUM, COUNT) and Window Functions (DENSE_RANK(), RANK(), ROW_NUMBER())

GROUP BY, HAVING, and ORDER BY clauses

TRUNC and ROUND functions

8: Subqueries (scalar, multi-valued, multi-column, nested)

9: Introduction to Joins (inner, left, right, full outer, cross, self)

10: Joins questions

11: Joins questions

12: String Functions (SUBSTR, INSTR, LOWER, UPPER, REPLACE, LTRIM, RTRIM) and DISTINCT

13: CASE statement, CTEs, indexes, and set operators (UNION, UNION ALL, MINUS, INTERSECT)

SQL optimization techniques

Fact & dimension tables

Star schema & snowflake schema

14: SQL Interview questions
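To give a feel for the aggregate and window functions listed in the SQL sessions, here is a minimal sketch. It is not course material: it uses Python's built-in `sqlite3` module as a stand-in database (the syllabus itself leans on Oracle-style syntax such as VARCHAR2 and NVL), and the `employees` table and its rows are made up for illustration. It assumes the bundled SQLite is version 3.25 or newer, which is when window functions became available.

```python
import sqlite3

# Hypothetical sample data; table and column names are illustrative only.
rows = [
    ("Asha", "Sales", 50000),
    ("Ravi", "Sales", 60000),
    ("Meera", "Sales", 60000),
    ("John", "IT", 70000),
    ("Priya", "IT", 80000),
]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany("INSERT INTO employees VALUES (?, ?, ?)", rows)

# Aggregate functions combined with GROUP BY, HAVING, and ORDER BY.
dept_totals = conn.execute(
    """
    SELECT dept, COUNT(*) AS headcount, SUM(salary) AS total_salary
    FROM employees
    GROUP BY dept
    HAVING COUNT(*) >= 2
    ORDER BY dept
    """
).fetchall()

# Window functions: rank salaries within each department.
# RANK leaves gaps after ties, DENSE_RANK does not, ROW_NUMBER is always unique.
ranked = conn.execute(
    """
    SELECT name, dept, salary,
           RANK()       OVER (PARTITION BY dept ORDER BY salary DESC) AS rnk,
           DENSE_RANK() OVER (PARTITION BY dept ORDER BY salary DESC) AS dense_rnk,
           ROW_NUMBER() OVER (PARTITION BY dept ORDER BY salary DESC, name) AS row_num
    FROM employees
    ORDER BY dept, row_num
    """
).fetchall()
conn.close()

for row in ranked:
    print(row)
```

In the Sales department, the two tied salaries share rank 1, and the next row gets RANK 3 but DENSE_RANK 2, which is the difference interviewers most often ask about.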

1: Introduction to Linux

- Overview of Linux and its history

- File system structure

- Introduction to the terminal

- Basic commands (date, cal, uname, ls, who, echo, file, last, tree)

2: Commands

- vi editor

- cat, tac, rev, touch, mkdir, rmdir, rm, cp, mv, cd, head, tail, more

3: Commands

- diff, gzip, gunzip, tar, soft link, hard link, wc, pipe, cut, paste, tr, sort, uniq, split

4: Commands

- grep, sed, chmod, umask, chown, find, ping, df, du

1: Introduction to Python

Installing Python and setting up the development environment

2: Data Types in Python Part 1

3: Data Types in Python Part 2

4: Data Types in Python Part 3

5: Operators in Python

Input function

6, 7 & 8: Control Statements

- if statement

- if .. else statement

- if .. elif .. else statement

- while loop

- for loop

- break statement

- continue statement

- pass statement

9: Functions in Python

- Built-in & user-defined functions

10: Modules, Packages and Libraries

11: Special Types of Functions

12: Regular Expressions

Date and time modules

13: Python programs practice

14 & 15: Pandas library in Python

1: Hadoop intro

Hadoop vs Spark

Spark Architecture

2: RDD introduction

Transformations

Spark internal workflow

3: RDD operations: map, flatMap, filter, reduceByKey, groupByKey

DAG, RDD lineage

4: RDD to DataFrame

DataFrame intro

SparkSession vs SparkContext

CSV data processing

5: Spark built-in functions part 1

6: Spark built-in functions part 2

7: Spark date functions & window functions

8: Spark JSON data processing in detail

9: Spark processing with pandas & data cleaning

10: Spark processing of Avro, Parquet, and ORC in depth

Avro vs Parquet vs ORC file formats

11: Spark JDBC: Oracle and MySQL data processing

12: Handling corrupted-record scenarios in detail (Data Cleaning)

13: Data Migration

Spark Submit

14: Handling incremental data (merge/upsert concept in Spark)

15: Spark performance tuning part 1

16: Spark performance tuning part 2

17: Spark Scenario-Based Interview Questions
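The merge/upsert idea from session 14 can be illustrated without a cluster. The sketch below is plain Python, not Spark code and not the course's implementation: the `merge_upsert` helper, the `id` key, and the sample rows are all hypothetical. It only shows the rule that incoming rows update existing records with the same key and are inserted otherwise.

```python
def merge_upsert(target, incoming, key):
    """Return a new list of rows where `incoming` rows update matching
    `target` rows (same `key` value) and all other incoming rows are inserted."""
    merged = {row[key]: dict(row) for row in target}
    for row in incoming:
        # Existing key -> update its columns; new key -> insert a fresh row.
        merged.setdefault(row[key], {}).update(row)
    return [merged[k] for k in sorted(merged)]


# Hypothetical existing table and incremental batch.
target = [
    {"id": 1, "city": "Pune", "amount": 100},
    {"id": 2, "city": "Mumbai", "amount": 200},
]
incoming = [
    {"id": 2, "city": "Mumbai", "amount": 250},  # changed row, so it is updated
    {"id": 3, "city": "Nagpur", "amount": 300},  # new row, so it is inserted
]

result = merge_upsert(target, incoming, "id")
for row in result:
    print(row)
```

The original `target` list is left untouched, which mirrors how an incremental load is usually validated against the previous snapshot before it replaces it.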

- Configure management groups


1: Introduction to Azure Cloud

- Overview of cloud computing and Azure

- Understanding Azure services and solutions

- Creating an Azure account and subscription

2: Azure Virtual Machine

3: Azure Storage

Blob Storage

ADLS Gen2

4: Azure Database

5: Azure Synapse

6 to 8: Azure Databricks & Azure DevOps

- All deployment activities, from building a notebook to publishing

- Azure Key Vault

9 to 14: Azure Data Factory (ADF)

Activities:

- Copy Activity

- GetMetadata Activity

- ForEach Activity

- Lookup Activity

- If Condition

- Delete Activity

- Debug Until Activity

- Execute Pipeline Activity

- Notebook Activity

15: Azure Scenario-Based Interview Questions

1: History and Introduction to Snowflake.

- Snowflake history

- Introduction to virtual warehouses, databases, and schemas

2: Snowflake architecture and account creation.

- Snowflake architecture

- Setting up a Snowflake account and environment

- Steps to create a Snowflake account

3: Data Loading

- Loading data into Snowflake from various sources:

- Explanation of supported file formats (CSV, JSON, Parquet, etc.).

- Using Snowflake’s COPY INTO command to load data from cloud storage or local files.

4: Data Modeling

- Introduction to structured, semi-structured, and unstructured data.

- Creating tables and schemas in Snowflake:

- Syntax for creating databases, schemas, and tables.

5: Querying Data

- Writing SQL queries in Snowflake to retrieve and manipulate data:

- Basic SQL syntax for querying tables, filtering rows, and selecting columns.

- Performing simple calculations and transformations using SQL functions (joins, window functions).

6: Data Transformation

- Introduction to Snowflake’s data transformation capabilities using SQL:

- Overview of data transformation tasks such as filtering, aggregating, and sorting data.

- Understanding the role of SQL in ETL (Extract, Transform, Load) processes.

7: Security and Access Control

- Managing users, roles, and privileges in Snowflake:

- Creating and managing users, roles, and permissions.

8: Monitoring and Optimization

- Overview of performance optimization techniques for data loading, querying, and data modeling.

- Zero copy cloning

- Internal stage/ external stage

- Overview of Snowpipe

- Introduction to Snowflake’s documentation and online resources.


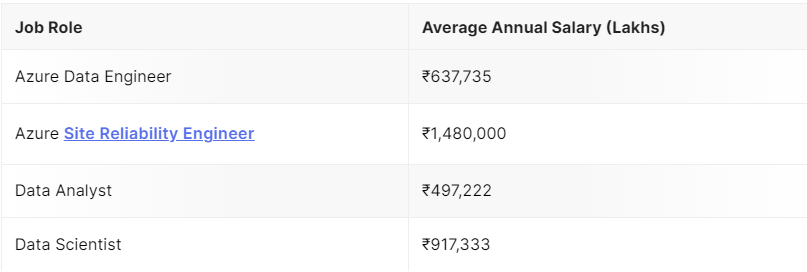

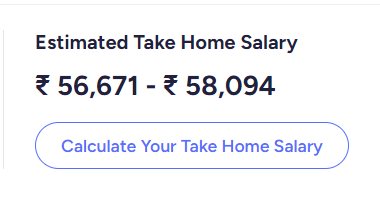

Azure Data Engineer Salary: Based on Employer

in Pune

Source :

"Glassdoor"

A data engineer's salary also depends on the company you work for. According to the data, organizations like Cognizant and Accenture Technology Solutions offer higher salaries. You will gain hands-on experience in our data engineering course in Pune. Let's see what some well-known organizations offer:

Azure Data Engineer Salary: Based on Experience

Source :

AmbitionBox

A specific skill set really does strengthen your case for a higher salary. Certain skills are in major demand when you are in the race to become a data engineer, and with expertise in those skills you can confidently ask for higher pay. Let's look at a few of these skills and the corresponding average salary. For learners from our course, the average salary for an Azure data engineer is around 7 to 8 LPA.

Similar Designations

When we have all data online it will be great for humanity. It is a prerequisite to solving many problems that humankind faces

- Robert Cailliau

Demand for Data Engineering Is Growing in Pune

More Supply, Less Demand

The demand for data engineers compared to data scientists and data analysts reflects the evolving needs of the industry. According to recent data:

Data Engineers: They are in high demand due to the need for robust data infrastructure. Companies are looking for experts who can build and manage the systems that allow for efficient data collection, storage, and processing.

Data Scientists: While they are also in high demand, the focus on data scientists has been intense, and there's a growing awareness that without the proper data infrastructure that engineers provide, data scientists can't perform their roles effectively.

Data Analysts: They are essential for interpreting data and providing actionable insights; however, their role is often considered entry-level compared to the more technical roles of data engineers and scientists.

Still not convinced???

REAL PEOPLE, REAL RESULTS

"Prominent" , Pune

Azure Data Engineering Training Course in Pune

FAQs

Data engineers work in diverse settings to build systems that collect, manage, and convert raw data into usable information for data scientists and business analysts to interpret. Their ultimate goal is to make data accessible so that organizations can use it to evaluate and optimise their performance.

The average salary for a Data Engineer in India ranges from ₹4–35+ lakhs per annum, depending on experience and skills. Here’s a breakdown:

- Freshers: ₹4–8 lakhs per annum

- Mid-Level (2–5 years): ₹8–18 lakhs per annum

- Senior-Level (5+ years): ₹18–35+ lakhs per annum

- Lead/Principal Roles: ₹35–60+ lakhs per annum

With expertise in tools like Azure Databricks, Azure Data Factory, Synapse, Spark, and Python, you can command higher salaries and advance your career quickly.

A strong foundation in mathematics and statistics is important for data engineers to analyse and interpret data accurately. Data engineers should also be able to work with database management systems like MySQL and Oracle.

Some of India's most popular high-paying IT jobs are Azure data engineer, full stack developer, cloud architect, blockchain engineer, data scientist, software engineering manager, cyber security engineer, and big data engineer.

To some extent, yes: certain tasks currently done by data engineers may be automated in the future. However, that would simply mean that data engineers' areas of responsibility shift toward more strategic tasks.

You will learn industry-leading tools and technologies, including:

- Azure Databricks for collaborative analytics

- Azure Data Factory for ETL and data integration

- Synapse for cloud-based data warehousing

- Apache Spark and PySpark for distributed data processing

- SQL for data querying and manipulation

- Python for data processing and automation

No prior experience in Data Engineering is required. However, basic knowledge of programming (preferably Python) and databases will be helpful. The course is designed to take you from beginner to advanced levels.

Yes, the course includes hands-on projects using real-world datasets. You will work on building data pipelines with Azure Databricks, Azure Data Factory, and Synapse, and analyze data using Spark, PySpark, SQL, and Python.

Yes, you will receive a Data Engineering Certification from Prominent Academy upon successful completion of the course. This certification is recognized by industry leaders and will boost your career prospects.

- Azure Databricks is a collaborative analytics platform optimized for Apache Spark, used for big data processing and machine learning.

- Azure Data Factory is a cloud-based ETL (Extract, Transform, Load) service used for data integration and orchestration.

In this course, you will learn how to use both tools together to build end-to-end data pipelines.
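As a rough picture of what an end-to-end pipeline does, the sketch below walks through the extract, transform, and load steps on a tiny dataset. It is only an illustration using Python's standard library: it does not use Azure Data Factory or Azure Databricks, and the CSV content, the `orders` table, and the `extract`, `transform`, and `load` function names are assumptions made for this example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input, standing in for a file landed in cloud storage.
RAW_CSV = """order_id,city,amount
1,Pune,100.50
2,pune ,200
3,Mumbai,
4,Mumbai,50.25
"""


def extract(text):
    """Extract: read raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with a missing amount and normalise city names."""
    cleaned = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip incomplete records
        cleaned.append(
            (int(row["order_id"]), row["city"].strip().title(), float(row["amount"]))
        )
    return cleaned


def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, city TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)

# Query the loaded data, as an analyst would after the pipeline runs.
totals = conn.execute(
    "SELECT city, SUM(amount) FROM orders GROUP BY city ORDER BY city"
).fetchall()
print(totals)
```

In a cloud setup, an orchestration service would typically schedule and chain these steps while a Spark-based engine handles the transformation at scale; the three-stage shape stays the same.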

Yes, the course includes beginner-friendly modules for Python and SQL. You will learn how to use these languages for data processing, querying, and automation, even if you have no prior experience.

You will receive:

- 24/7 access to course materials

- Personalized mentorship from industry experts

- Dedicated doubt-clearing sessions

- Career guidance and job placement support

You can enroll by clicking the Enroll Now button on this page. For more details, you can also Request a Free Demo or Talk to a Career Advisor.

The course fee is competitive and includes all learning materials, hands-on projects, and certification. For detailed pricing, please Download the Brochure or contact our support team.

We do not offer refunds once the course has been purchased. However, we are confident in the quality of our Data Engineering Course and provide comprehensive support to ensure your success. If you have any concerns or need assistance during the course, our team is available to help you through personalized mentorship, doubt-clearing sessions, and career guidance.

We encourage you to review the course curriculum, attend a free demo session, or speak with a career advisor before enrolling to ensure this course is the right fit for you.

Yes, many students transition to Data Engineering from non-technical backgrounds. The course starts with foundational concepts and gradually progresses to advanced topics, making it accessible to everyone. However, a willingness to learn programming and data concepts is essential.

Yes, you’ll learn how to deploy and manage data pipelines using Azure Data Factory and Azure Databricks. You’ll also gain hands-on experience with Synapse for cloud-based data warehousing.

If you’re interested in building data infrastructure, working with big data tools, and solving real-world data problems, this course is perfect for you. You can also Request a Free Demo or Talk to a Career Advisor to get more clarity.

Absolutely! The projects you work on during the course are designed to be industry-relevant. You can showcase them on your resume, LinkedIn profile, and portfolio to stand out to potential employers.

The demand for Data Engineers is growing rapidly, with companies across industries investing in data infrastructure. Skills in Azure Databricks, Azure Data Factory, Spark, and Python are highly sought after, making this the perfect time to start your career in Data Engineering.

Application Form

Get a Live FREE Demo

We give free career counselling that you don’t want to miss.

Speak with our Advisor to learn more about the courses.

We won’t spam you with emails or call you a hundred times. Let us know what you are looking for, and our team will reach out to schedule a time with you.